Over the last year Oracle has changed a lot, moving decisively into the cloud business and expanding its portfolio with IaaS, PaaS and SaaS solutions. As part of the Openlab collaboration between Oracle and CERN, we have been testing some of these cloud solutions. Oracle Cloud Infrastructure (OCI) is one of them, and in this post I'm going to show how to install and run a Kubernetes cluster on OCI.

Prerequisites

- An account on Oracle Cloud Infrastructure

- Install kubectl

- Install Terraform

- Create a directory for Terraform plugins:

$ mkdir -p /home/user/.terraform.d/plugins/

- Download the OCI Terraform Provider and install it in that directory

- Create a Terraform CLI configuration file, /home/user/.terraformrc, that specifies the path to the OCI Terraform Provider:

providers {
  oci = "/home/user/.terraform.d/plugins/terraform-provider-oci"
}
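The steps above can also be scripted. The function below is a hypothetical helper. `setup_oci_provider_config` is not part of Terraform or the OCI provider. It is a sketch under a few assumptions:

- It uses $HOME instead of the hard-coded /home/user.
- It writes the same legacy providers block into ~/.terraformrc, which is the file name Terraform reads its CLI configuration from on Linux and macOS.
- It refuses to touch an existing ~/.terraformrc.
- It leaves copying the provider binary into the plugin directory to you.

```shell
# Hypothetical helper: prepare the plugin directory and ~/.terraformrc
# for the OCI provider. Does not download or copy the provider itself.
setup_oci_provider_config() {
  plugin_dir="$HOME/.terraform.d/plugins"
  rc="$HOME/.terraformrc"

  mkdir -p "$plugin_dir" || return 1

  # Never overwrite an existing CLI config; merge the block by hand instead.
  if [ -e "$rc" ]; then
    echo "$rc already exists; add the providers block to it manually" >&2
    return 1
  fi

  # Same providers block as in the prerequisites, with $HOME expanded.
  cat > "$rc" <<EOF
providers {
  oci = "$plugin_dir/terraform-provider-oci"
}
EOF
}
```

Run setup_oci_provider_config once, then copy terraform-provider-oci into ~/.terraform.d/plugins.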

Installation

There are a few steps to perform:

- Create private and public keys

- Download and configure the Terraform Kubernetes Installer

- Create the Kubernetes Cluster

Create private and public keys

First of all, you need to create an API signing key for your OCI account.

Let's make a directory to store your keys:

$ mkdir /home/user/.oci

You can create a key without a passphrase:

$ openssl genrsa -out ~/.oci/oci_api_key.pem 2048

Or with a passphrase, encrypting the key with AES-128:

$ openssl genrsa -out ~/.oci/oci_api_key.pem -aes128 2048

Restrict the private key to your user and extract the public key:

$ chmod go-rwx ~/.oci/oci_api_key.pem

$ openssl rsa -pubout -in ~/.oci/oci_api_key.pem -out ~/.oci/oci_api_key_public.pem

You can also derive the public key in SSH format:

$ ssh-keygen -y -f ~/.oci/oci_api_key.pem > ~/.oci/oci_api_key.pub
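The key commands above can be collected into one function. This is a hypothetical helper; `make_oci_keys` is not part of any OCI tooling. It behaves as follows:

- It writes the same three files into a directory you choose, defaulting to ~/.oci.
- It creates the private key with owner-only permissions.
- It refuses to overwrite an existing key.
- It derives the SSH-format key only when ssh-keygen is installed.

```shell
# Hypothetical helper: generate the OCI API key files used in this post.
# Usage: make_oci_keys [DIR]   (DIR defaults to ~/.oci)
make_oci_keys() {
  key_dir="${1:-$HOME/.oci}"
  key="$key_dir/oci_api_key.pem"

  mkdir -p "$key_dir" || return 1
  chmod 700 "$key_dir" || return 1

  # Never overwrite a key that may already be registered in OCI.
  if [ -e "$key" ]; then
    echo "refusing to overwrite $key" >&2
    return 1
  fi

  # Unencrypted 2048-bit RSA private key, created readable by the owner only.
  # (Add -aes128 to the genrsa call to protect it with a passphrase instead.)
  (umask 077 && openssl genrsa -out "$key" 2048) || return 1

  # PEM public key: this is the file whose contents go into the OCI console.
  openssl rsa -pubout -in "$key" -out "$key_dir/oci_api_key_public.pem" || return 1

  # Optional OpenSSH-format public key, only if ssh-keygen is available.
  if command -v ssh-keygen >/dev/null 2>&1; then
    ssh-keygen -y -f "$key" > "$key_dir/oci_api_key.pub" || return 1
  fi
}
```

Running make_oci_keys with no argument fills ~/.oci. Running it again fails instead of replacing a key you may already have uploaded.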

Now upload the public key in PEM format (~/.oci/oci_api_key_public.pem) to your OCI account.

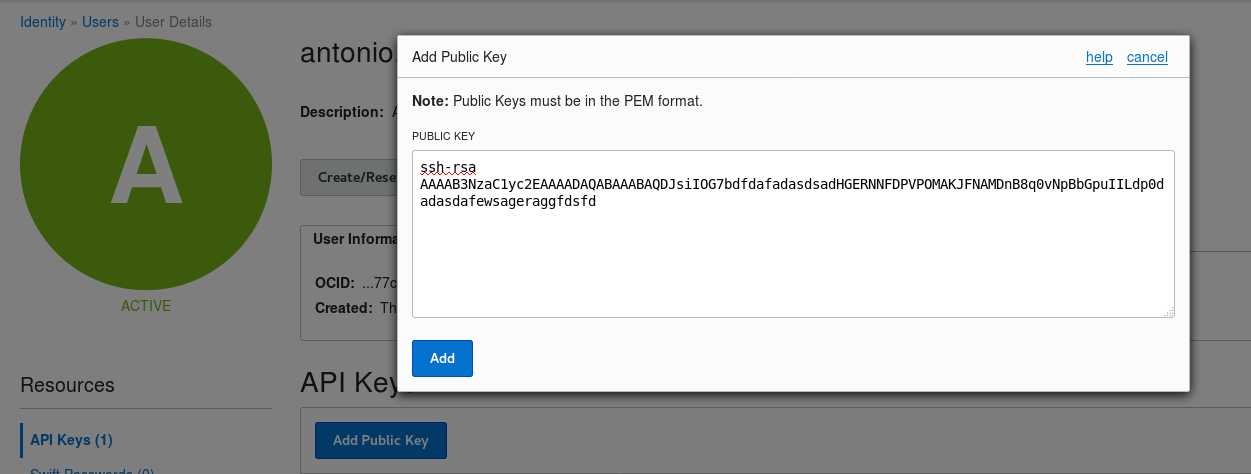

In the top right you have your username, type it and go to User settings. There you can add a public key like in the figure :

Download and configure the Terraform Kubernetes Installer

Clone the repository, change into its directory and copy the example file:

$ cp terraform.example.tfvars terraform.tfvars

Now let's fill in the file. In the BMCS Service section we need to provide information about our OCI tenancy and account. These are the values to add:

- tenancy_ocid: shown at the bottom left of the console

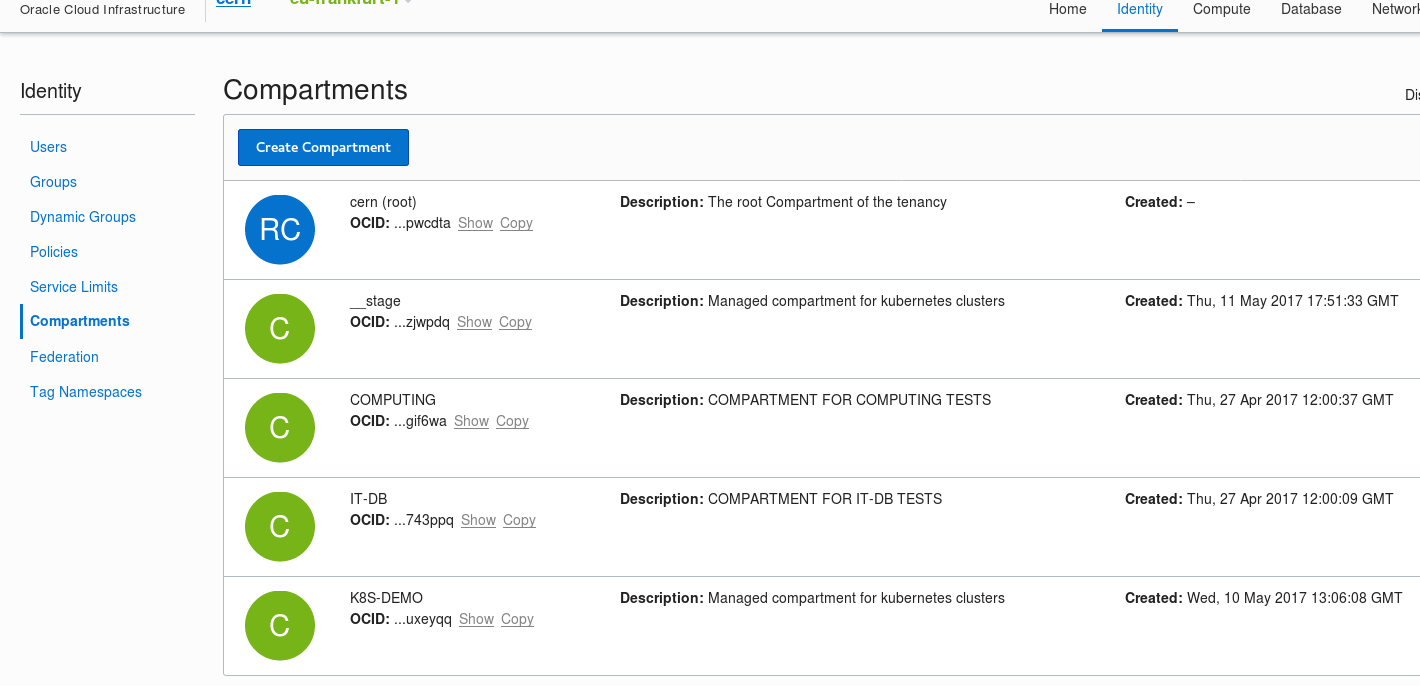

- compartment_ocid: in the Identity tab, under Compartments

- user_ocid: in the Identity tab, under Users

- fingerprint: the value shown after you upload your public key

- private_key_path: the key created earlier, /home/user/.oci/oci_api_key.pem

- region: shown in the top left of the console
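You can also compute the fingerprint locally and compare it with the one shown in the console. The sketch below follows the command given in Oracle's API signing key documentation. It takes an MD5 digest of the DER-encoded public key and prints it as colon-separated hex pairs. The function name `oci_fingerprint` is only illustrative.

```shell
# Hypothetical helper: print the OCI API key fingerprint of a private key.
# Usage: oci_fingerprint [KEY]   (KEY defaults to ~/.oci/oci_api_key.pem)
oci_fingerprint() {
  key="${1:-$HOME/.oci/oci_api_key.pem}"
  # `openssl md5 -c` prints "(stdin)= aa:bb:..." (OpenSSL 1.x) or
  # "MD5(stdin)= aa:bb:..." (OpenSSL 3.x); keep only the last field.
  openssl rsa -pubout -outform DER -in "$key" \
    | openssl md5 -c \
    | awk '{ print $NF }'
}
```

The result should match the fingerprint value that goes into terraform.tfvars. If the key has a passphrase, openssl asks for it.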

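If you want to enable SSH access to the etcd, master and worker nodes, just uncomment these lines. Keep only one of the two etcd_ssh_ingress lines: 10.0.0.0/16 limits SSH to that private address range, while 0.0.0.0/0 allows it from anywhere.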

etcd_ssh_ingress = "10.0.0.0/16"

etcd_ssh_ingress = "0.0.0.0/0"

master_ssh_ingress = "0.0.0.0/0"

worker_ssh_ingress = "0.0.0.0/0"

To open the NodePorts on the workers, uncomment:

worker_nodeport_ingress = "0.0.0.0/0"

Be careful: the 0.0.0.0/0 rules open SSH and the NodePorts to the whole internet.

Create the Kubernetes Cluster

Once you have filled in terraform.tfvars, initialize the working directory and create an execution plan:

$ terraform init

$ terraform plan -out YOUR_PLAN_NAME

Then apply it:

$ terraform apply YOUR_PLAN_NAME

To check that the cluster is running properly, run the script:

$ scripts/cluster-check.sh

If everything is OK, it will display something like this:

The Kubernetes cluster is up and appears to be healthy.

Kubernetes master is running at https://PUBLIC_IP:443

KubeDNS is running at https://PUBLIC_IP:443/api/v1/namespaces/kube-system/services/kube-dns/proxy

Now, if you want kubectl to point directly to your new cluster, just copy the file:

$ cp generated/kubeconfig ~/.kube/config
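The cp above overwrites any existing ~/.kube/config, and it fails if ~/.kube does not exist yet. A hypothetical `install_kubeconfig` helper can create the directory and keep a timestamped backup of the old file first. The name and the backup naming scheme are illustrative choices.

```shell
# Hypothetical helper: install a kubeconfig as ~/.kube/config, backing up
# any existing file first. Usage: install_kubeconfig [SRC]
install_kubeconfig() {
  src="${1:-generated/kubeconfig}"
  dest="$HOME/.kube/config"

  [ -f "$src" ] || { echo "no kubeconfig at $src" >&2; return 1; }
  mkdir -p "$HOME/.kube" || return 1

  # Keep the previous config instead of silently replacing it.
  if [ -e "$dest" ]; then
    backup="$dest.bak.$(date +%Y%m%d%H%M%S)"
    cp "$dest" "$backup" || return 1
    echo "existing config saved to $backup" >&2
  fi

  # The kubeconfig holds cluster credentials, so keep it owner-only.
  cp "$src" "$dest" && chmod 600 "$dest"
}
```

Run it from the installer directory as install_kubeconfig. If you would rather not touch ~/.kube/config at all, kubectl also reads the KUBECONFIG environment variable. Running export KUBECONFIG=$PWD/generated/kubeconfig in that directory points the current shell at the new cluster.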

Your cluster is up and running!

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master-ad1-0.k8smasterad1.k8sbmcs.oraclevcn.com Ready master 1h v1.8.5

k8s-worker-ad1-0.k8sworkerad1.k8sbmcs.oraclevcn.com Ready node 1h v1.8.5
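To script this check, for example right after terraform apply, you can pipe the node list into a small filter. The sketch below is a hypothetical helper that reads kubectl get nodes --no-headers output. It succeeds only when every node's STATUS column is exactly Ready, so a cordoned node (Ready,SchedulingDisabled) is reported as well.

```shell
# Hypothetical helper: succeed only if every node listed on stdin is Ready.
# Expects the columns of `kubectl get nodes --no-headers`
# (NAME STATUS ROLES AGE VERSION).
all_nodes_ready() {
  awk '
    NF == 0 { next }                   # ignore blank lines
    {
      total++
      if ($2 != "Ready") { bad++; print "not ready: " $1 }
    }
    END {
      if (total == 0) { print "no nodes found"; exit 1 }
      exit (bad > 0)
    }
  '
}
```

Usage: kubectl get nodes --no-headers | all_nodes_ready && echo "all nodes Ready".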

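If you want to SSH into your servers in OCI, save the generated private key, restrict its permissions (ssh refuses keys that others can read) and look up the workers' public IPs:

$ terraform output ssh_private_key > private.key

$ chmod 600 private.key

$ terraform output worker_public_ips

$ ssh -i private.key opc@WORKER_PUBLIC_IP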
